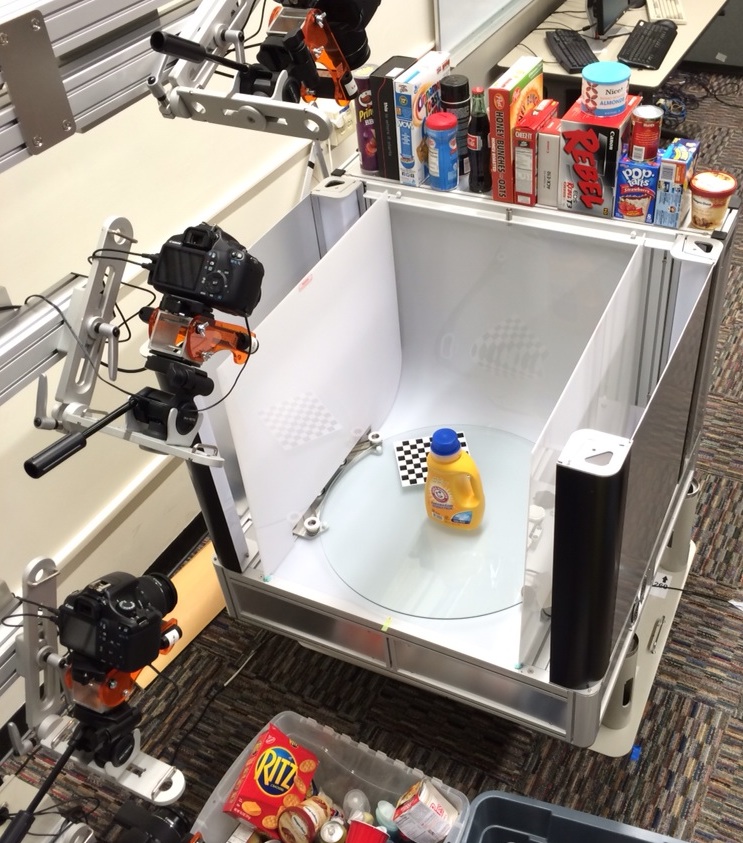

Here you can find data we have collected for the objects used in the Amazon Picking Challenge. The data has been collected and processed using the same system described in the ICRA 2014 publication "A Large-Scale 3D Database of Object Instances" and the ICRA 2015 publication "Range Sensor and Silhouette Fusion for High-Quality 3D Scanning".

Specifically, for each object, we provide:

- 600 12-megapixel images, sampling the viewing hemisphere

- 600 registered RGB-D point clouds from a Carmine 1.09 sensor

- Pose information for each of the above images and point clouds

- Segmentation masks for each of the above images (and segmented point clouds)

- Merged point clouds consisting of data from all 600 viewpoints

- Reconstructed meshes from the merged point clouds

Note that some objects, depending on their properties (e.g. transparency), may not have complete point clouds or meshes. We include them because the raw data and/or the partial meshes may still be useful. In particular, first_years_take_and_toss_straw_cups, munchkin_white_hot_duck_bath_toy, and safety_works_safety_glasses have models of significantly below-average quality.
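If you process the objects in bulk, you may want to treat the three objects named above separately. The following is a minimal sketch of one way to do that; the function name `split_by_model_quality` is a hypothetical helper introduced here, not part of the dataset, and the only names it relies on are the three listed above.

```python
# Objects named in the text above as having significantly below-average models.
LOW_QUALITY_OBJECTS = frozenset({
    "first_years_take_and_toss_straw_cups",
    "munchkin_white_hot_duck_bath_toy",
    "safety_works_safety_glasses",
})


def split_by_model_quality(object_names):
    """Split object names into (usable, flagged) lists, preserving input order.

    Hypothetical helper: 'flagged' holds the objects listed above, whose raw
    data may still be useful even though their meshes are poor or partial.
    """
    usable, flagged = [], []
    for name in object_names:
        if name in LOW_QUALITY_OBJECTS:
            flagged.append(name)
        else:
            usable.append(name)
    return usable, flagged
```

Flagged objects are kept in a separate list rather than dropped, since their images and point clouds are still provided.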

You can access the data via this Google Drive link.

To get started loading KinBodies into OpenRAVE right away, you can use the files in 'kinbody.tgz' for each object.
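As a rough sketch of the first step, the archive for one object can be unpacked with Python's standard library. The internal layout of 'kinbody.tgz' is not described here, so the code below assumes (and this is only an assumption) that the KinBody definitions are XML files somewhere inside the archive; the function name and all paths are hypothetical.

```python
import tarfile
from pathlib import Path


def extract_kinbody_archive(archive_path, dest_dir):
    """Unpack a gzipped tar archive and return the XML files found in it.

    Assumption: KinBody definitions are stored as *.xml files. Adjust the
    pattern if the archive you downloaded is organised differently.
    """
    dest = Path(dest_dir).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        # Refuse any member that would be written outside dest_dir.
        for member in archive.getmembers():
            target = (dest / member.name).resolve()
            if target != dest and dest not in target.parents:
                raise ValueError("unsafe path in archive: " + member.name)
        archive.extractall(dest)
    # Search only the extraction directory, recursively.
    return sorted(dest.rglob("*.xml"))
```

With openravepy installed, a path returned by this function could then be passed to an OpenRAVE environment's `Load` method; that step is left out here because it depends on your OpenRAVE installation.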